One of the most recent advancements in artificial intelligence is text-to-image generation. These systems transform written text into highly realistic images. A recent breakthrough in how computers understand human language has substantially improved the quality of their output. While these systems push the boundaries of fields such as art, they also pose a severe threat to our information ecosystem: they are cheap, readily accessible tools that could be used to create fabricated photos to mislead the public.

The best-known text-to-image applications in the industry are DALL·E 2, Stable Diffusion, CogView2 and NightCafe Studio. Some of these applications are freely available to the public, while others apply an invitation-only access policy.

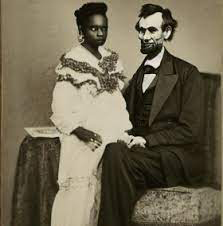

I tested these systems with different prompts to understand their potential to create disinformation content for current and historical events. Below are samples of the images I created.

AI-generated image based on the command: Israeli soldiers storm the Al-Aqsa Mosque

AI-generated image based on the command: Military trucks on San Francisco’s Golden Gate Bridge

Four AI-generated images based on the command: A fire in New York’s Times Square

These models also have the potential to distort our perception of history and could be used to create many conspiracy theories. For example, here is a photo of Abraham Lincoln.

AI-generated image based on the command: Abraham Lincoln with his black wife

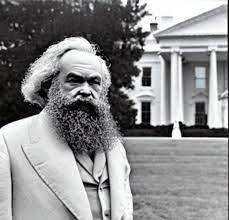

AI-generated image based on the command: Karl Marx in front of the White House

A problem of trust

These AI-generated photos not only produce erroneous beliefs about actual past events; they also threaten the confidence an informed public should have in our information ecosystem. When compelling but fabricated pictures of events go viral, real ones lose value.

In today’s journalistic culture, photos have become essential elements of news stories. Journalists need a compelling story to interest and persuade their readers that they are presenting news. The images they use tell part of the story and may therefore produce an illusory coherence that is absent from the actual story.

Given the already well-documented abuse of Photoshop in advertising and promotion, it is easy to imagine an unscrupulous actor circulating an AI-fabricated image on social media and blogs, accompanied by a simple caption to suggest an entirely fictional story. For instance, a journalist who wants to make the public believe in the discredited RussiaGate thesis of the “pee tape” contained in the Steele dossier might show a picture of a pee-stained bed in a Moscow hotel. Together, the caption and the photo can form a visually credible message that is pure disinformation.

What distinguishes text-to-image systems from other tools, such as Photoshop and other AI systems, is that the technological barrier is much lower. Any layperson who can read and write and has access to a computer and the internet can create these images, even without design or graphics skills. Other systems require a creator with specialized skills, which may include coding. Some images generated with today’s tools, such as those displayed above, may need more finesse to avoid being easily detected as forgeries, but one can expect the technology to improve over time.

Possible solutions to this problem

To mitigate the risk of disinformation, creators of these systems could apply multiple solutions, such as preventing the generation of photos associated with known personalities, places, and events, and establishing a list of prohibited keywords and prompts. Another approach would be a gradual, strategic launch: testing the app with a limited audience and assessing the possible danger based on user feedback. Some current vendors, such as DALL·E 2 and Stable Diffusion, have already established terms-of-use policies that explicitly prohibit misuse of the tool. While I was conducting my research, DALL·E 2 even suspended my account when I attempted some prompts to generate photos that could be used for disinformation purposes. But in other cases, it did not. The system has since become accessible to anyone, after the waiting list was removed.
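As a rough illustration of the keyword-list idea, here is a minimal Python sketch of a prompt filter. Everything in it is an assumption made for illustration: the `BLOCKED_TERMS` list, the function names and the matching rule are hypothetical and do not describe how any vendor's moderation actually works. It simply checks whether a prompt contains a listed name or place as a whole phrase.

```python
import re
from typing import List

# Hypothetical blocklist: illustrative terms only, not any vendor's real policy.
BLOCKED_TERMS = {
    "abraham lincoln",
    "karl marx",
    "white house",
    "golden gate bridge",
}


def normalize(prompt: str) -> str:
    """Lowercase the prompt and collapse punctuation and whitespace into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", prompt.lower()).strip()


def find_blocked_terms(prompt: str, blocked=BLOCKED_TERMS) -> List[str]:
    """Return, in sorted order, the blocked terms that appear as whole phrases."""
    padded = f" {normalize(prompt)} "
    return sorted(term for term in blocked if f" {term} " in padded)


def is_allowed(prompt: str) -> bool:
    """A prompt is allowed only if it contains none of the blocked terms."""
    return not find_blocked_terms(prompt)


if __name__ == "__main__":
    for text in (
        "Karl Marx in front of the White House",
        "A watercolor of a lighthouse at dusk",
    ):
        print(text, "->", "allowed" if is_allowed(text) else "blocked")
```

A filter like this is easy to evade with paraphrases or misspellings, which is one reason a keyword list would need to be combined with the other measures described above.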

In the absence of proper self-regulation, governments should start to intervene and consider the threat these systems present to the public. One can easily imagine unscrupulous politicians using these photos during an election campaign to attack an opponent or alter the public’s perception of ongoing events. There is always a tricky balance between enjoying a breakthrough in artificial intelligence that promises to radically simplify how we create artwork and the risk of recklessly putting such a powerful disinformation tool in the hands of those who would abuse it. Media literacy projects should update their educational materials to teach citizens how to detect fabricated images. Fact-checking organizations could also play an important role in detecting these fake images and exposing them to the public.

The views expressed in this article are the author’s own and do not necessarily reflect Fair Observer’s editorial policy.
